- Individuals and interactions over processes and tools.

- Working software over comprehensive documentation.

- Customer collaboration over contract negotiation.

- Responding to change over following a plan.

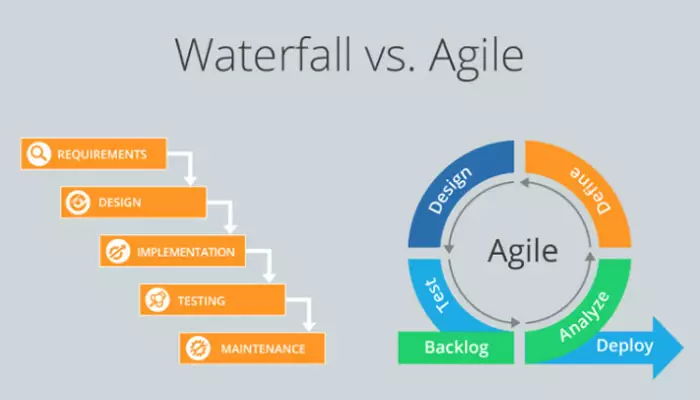

Before agile: the waterfall methodology

Veterans like me remember when the waterfall methodology was the gold standard for software development. Using the waterfall method required a lot of documentation up front, before you started coding. Typically, the process began with a business analyst writing a business requirements document that outlined what the business needed from the application. These documents were long and detailed, containing everything from the overall strategy to full functional specifications and user interface designs.

- Technologists used the business requirements document to develop a technical requirements document.

- This document defined the application’s architecture, data structures, functional object-oriented designs, user interfaces, and other non-functional requirements.

- Once the business and technical requirements documents were complete, developers began coding, followed by integration and finally testing.

- All of this had to be done before an application was considered production ready. The whole process could easily take a couple of years.

The waterfall methodology in practice

The documentation used for the waterfall methodology was called “the specification”, and developers were expected to know it as well as its authors did. You could be reprimanded for failing to correctly implement a key detail noted, say, on page 77 of a 200-page document.

- Software development tools also required specialized training, and there weren’t as many tools to choose from.

- We did all the low-level work ourselves, such as opening database connections and multithreading our data processing.

- Even for basic applications, teams were large and communication tools were limited. Our technical specifications kept us aligned, and we relied on them like the Bible.

- If a requirement changed, we put company managers through a lengthy review and approval process.

- Communicating the changes to the entire team and correcting the code were expensive procedures.

- Since software was developed based on the technical architecture, lower-level artifacts were developed first and dependent artifacts came later.

- Tasks were assigned by skill, and it was common for database engineers to build tables and other database artifacts first.

- Application developers coded the functionality and business logic next, with the user interface overlaid last. Months passed before anyone saw the app working.

- By this time, stakeholders were often getting nervous, and had often become savvier about what they really wanted. No wonder implementing changes was so expensive.

Pros and cons of the waterfall methodology

Invented in 1970, the waterfall methodology was revolutionary because it brought discipline to software development and ensured that there was a clear specification to follow. It was based on the waterfall manufacturing method, derived from Henry Ford’s assembly line innovations in 1913, which provided certainty about every step of the production process. The waterfall method was intended to ensure that the end product matched what was originally specified.

When software teams began adopting the waterfall approach, IT systems and their applications were typically complex and monolithic, requiring discipline and clear deliverables to complete. Requirements also changed slowly compared to today, so large-scale efforts were less of a problem. In fact, systems were built on the assumption that they would not change, but would instead be perpetual dreadnoughts. Multi-year timelines were common not only in software development, but also in manufacturing and other business activities. But the waterfall’s rigidity became its downfall as we entered the Internet age, when speed and flexibility became more valuable.

The turn to agile methods

Software development began to change when developers started working on Internet applications. Much of the early work was done in start-ups where teams were smaller, co-located, and often lacked traditional computing backgrounds.

- There were financial and competitive pressures to bring websites, applications, and new capabilities to market faster. Development tools and platforms changed rapidly in response.

- This made many of us who worked in startups question the waterfall methodology and look for ways to be more efficient.

- We couldn’t afford to do all the detailed documentation up front and needed a more iterative and collaborative process.

- We still debated changes to the requirements, but we were more open to experimentation and to adapting our software based on user feedback.

- Our organizations were less structured and our applications were less complex than legacy corporate systems, so we were more open to building rather than buying applications.

- More importantly, we were trying to grow businesses, so when users told us something was wrong, we usually listened.

- Having the skills and capabilities to innovate became strategically important.

Why Agile Development Delivers Better Software

When you take the agile principles as a whole, implement them in an agile framework, take advantage of collaboration tools, and adopt agile development practices, you typically get higher-quality applications that are faster to develop. You also get better technical practices, that is, better technical hygiene.

Agile Development principles and reasons

- The main reason is that agile is designed for flexibility and adaptability.

- You don’t need to define all the answers up front, as you do in the waterfall method. Instead, you break the problem down into digestible components that are then developed and tested with users.

- If something doesn’t work well or as expected, or if the effort reveals something that hadn’t been accounted for, you can adapt the effort and get back on track quickly, or even change lanes if necessary.

- Agile allows each team member to contribute to the solution and requires each to take personal responsibility for their work.

- Agile principles, frameworks, and practices are designed for today’s operating conditions.

- Agile typically prioritizes iterative development and leveraging feedback to improve the application and the development process.

- Both iteration and feedback are well suited to today’s world, where operating smarter and faster matters.

- Agile development also encourages continuous improvement. Imagine that Microsoft had stopped developing Windows after version 3.1, or that Google had stopped improving its search algorithms in 2002.

- Software constantly needs to be updated, supported, and improved; agile methodology establishes both a mindset and a process for that continuous improvement.